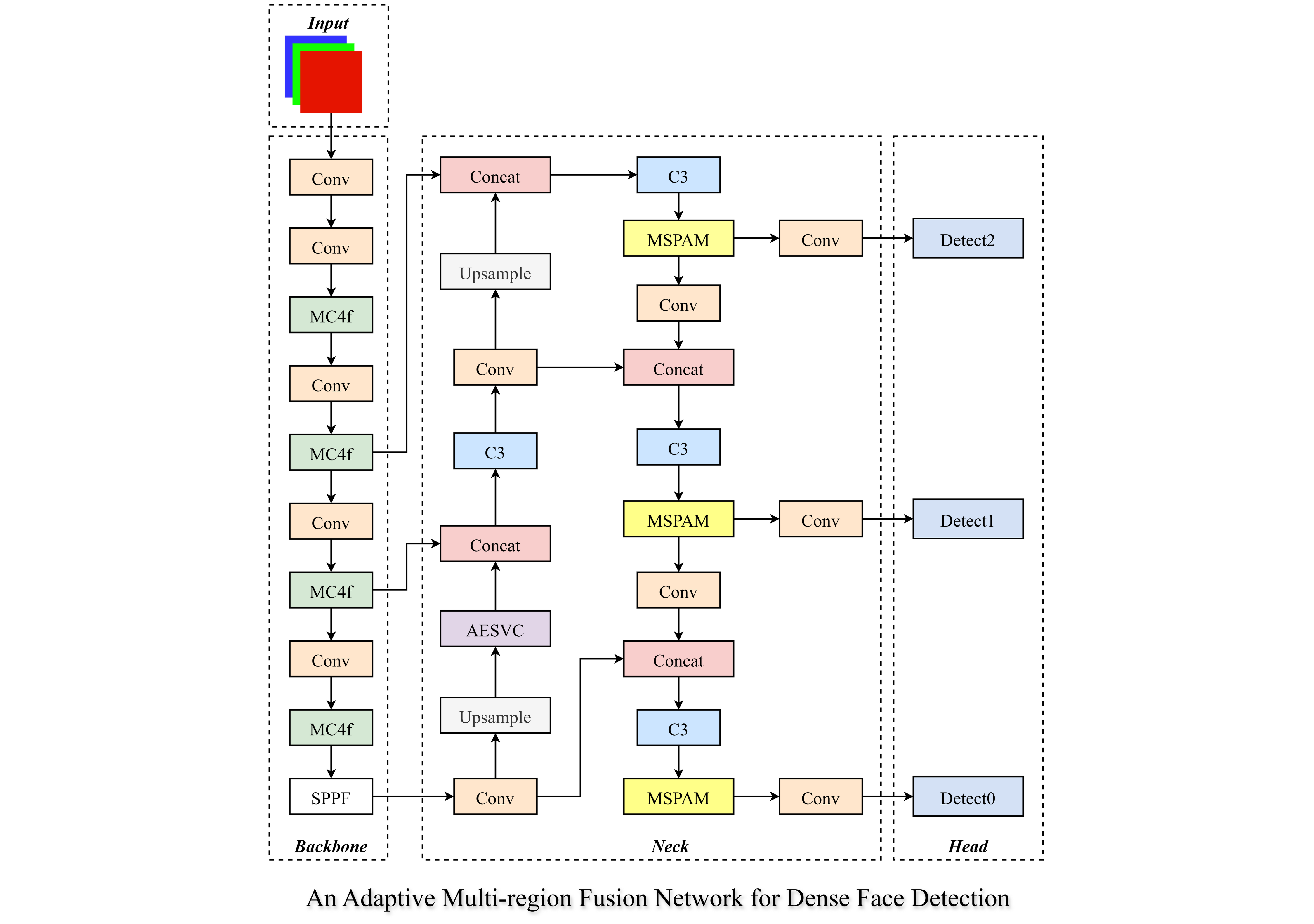

An Adaptive Multi-region Fusion Network for Dense Face Detection

In recent years, face detection has been widely applied in intelligent monitoring systems. However, small, blurred, and occluded faces in multi-face scenes still lead to missed detections and low detection accuracy. To address these challenges, an adaptive multi-region fusion network is designed for dense face detection. First, in the shallow layers of the network, a multi-scale cross-stage fusion (MC4f) module replaces the C3 module, which addresses exploding or vanishing gradients in deep networks and promotes effective convergence on small datasets. Second, an adaptive fusion explicit spatial vision centre (AESVC) is placed between the backbone and neck networks to adaptively fuse local and global features, refining face information and enhancing feature representation in complex tasks. Third, a multi-scale parallel attention mechanism (MSPAM) is proposed to enhance the cross-scale fusion of facial features and reduce the loss of shallow features. Finally, to achieve accurate facial key point localisation and alignment, the wing loss and A-loss functions are integrated to balance the contributions of easy and difficult samples. Compared with the original model, the proposed model increases the mean average precision (mAP) by 1.75%, 2.01%, and 3.06% for easy, medium, and hard samples, respectively. The experimental results demonstrate the effectiveness of the adaptive multi-region fusion network for dense face detection.
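As an illustration of the key point localisation term mentioned above, the following is a minimal sketch of wing loss in its commonly published form: logarithmic for small errors and linear for large ones, with a constant that joins the two pieces continuously. The function name `wing_loss` and the default values `w=10.0` and `eps=2.0` are assumptions made for this example, not settings taken from this paper, and the sketch does not cover A-loss or how the two terms are weighted in the proposed model.

```python
import math


def wing_loss(pred, target, w=10.0, eps=2.0):
    """Mean wing loss over paired landmark coordinates.

    pred, target: equal-length sequences of floats (flattened x/y values).
    w:   width of the non-linear region (assumed default for this sketch).
    eps: curvature of the non-linear region (assumed default for this sketch).
    """
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and equal in length")

    # Constant that makes the log and linear pieces meet at |x| == w.
    c = w - w * math.log(1.0 + w / eps)

    total = 0.0
    for p, t in zip(pred, target):
        x = abs(p - t)
        if x < w:
            # Small errors: logarithmic piece, which amplifies small residuals.
            total += w * math.log(1.0 + x / eps)
        else:
            # Large errors: L1-like piece, shifted by c for continuity.
            total += x - c
    return total / len(pred)


if __name__ == "__main__":
    predicted = [10.0, 20.5, 33.0]
    ground_truth = [10.0, 20.0, 3.0]
    print(wing_loss(predicted, ground_truth))
```

Relative to a plain L1 or L2 penalty, the logarithmic piece gives small and medium localisation errors a larger share of the gradient, which is the usual motivation for using this loss on facial landmarks.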

Copyright (c) 2025 Journal of Engineering and Technological Sciences

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.